Introduction

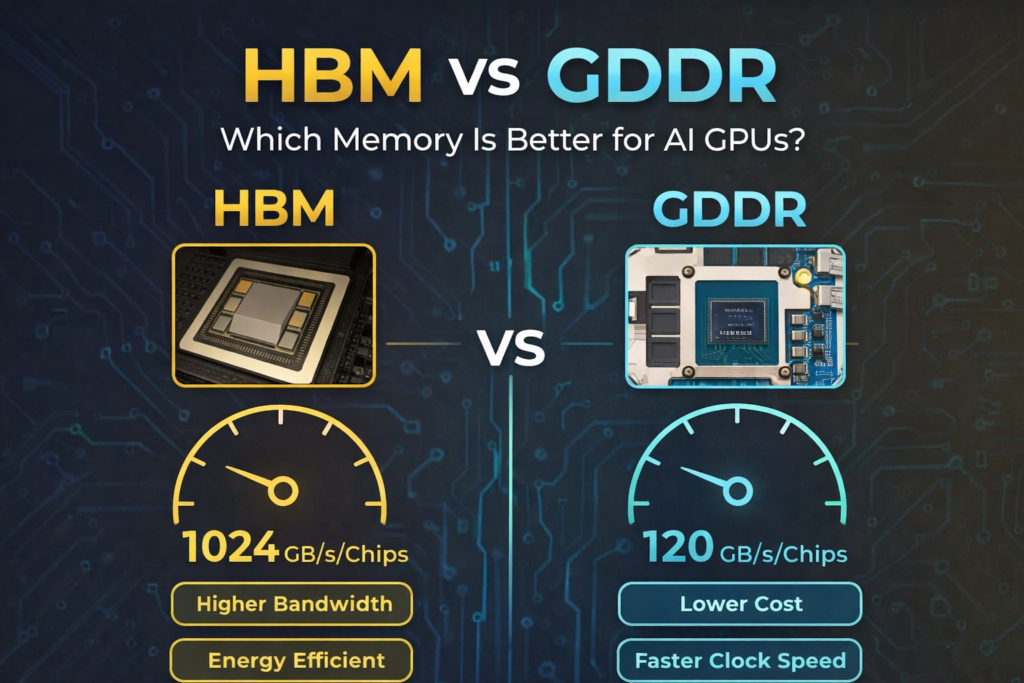

The difference between HBM and GDDR matters because AI performance depends as much on memory architecture as it does on raw compute power.

When people compare GPUs, they usually look at cores, clock speeds, and theoretical performance numbers. Memory often becomes an afterthought. But in modern AI systems, memory is not just a supporting component. It determines whether a GPU can stay fully utilized or ends up waiting for data.

HBM and GDDR are both high-performance memory technologies, but they were built for different purposes. Understanding that difference helps explain why some GPUs dominate AI workloads while others are optimized for graphics and gaming.

What Is GDDR Memory?

GDDR (Graphics Double Data Rate) memory is a high-speed DRAM designed primarily for gaming and visual processing workloads.

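It sits outside the GPU package, as separate chips arranged around the processor on the circuit board. Each chip connects through a narrow interface (32 bits on GDDR6) that runs at very high per-pin data rates, and the GPU's total bus width is the sum of its chips, typically a few hundred bits. Over time, GDDR evolved into faster generations such as GDDR6 and GDDR6X, each raising the per-pin data rate to deliver more bandwidth. GDDR6X, for example, uses PAM4 signaling to send two bits per symbol. For graphics workloads that process frames in bursts, this design works extremely well.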
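Peak bandwidth for any DRAM interface is the bus width multiplied by the per-pin data rate. The sketch below applies that to a GDDR-style board; the 12-chip layout and the listed data rates are illustrative assumptions, not a specific product's specification.

```python
def peak_bandwidth_gbs(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s: total pins x Gbit/s per pin, / 8 bits per byte."""
    return bus_width_bits * gbps_per_pin / 8


# Assumed GDDR-style board: 12 chips, each with a 32-bit interface.
CHIPS = 12
BITS_PER_CHIP = 32
bus_width = CHIPS * BITS_PER_CHIP  # 384-bit bus

# With the bus width fixed by the board layout, bandwidth only grows
# if the per-pin data rate grows.
for rate in (14.0, 16.0, 21.0):
    print(f"{rate:>4} Gbps/pin -> {peak_bandwidth_gbs(bus_width, rate):.0f} GB/s")
```

Because adding chips means adding board traces, the practical lever on a GDDR card is the per-pin rate, which is exactly the dimension that costs power and signal integrity.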

However, GDDR relies on per-pin speed rather than interface width. As data rates rise, power consumption increases and signal integrity becomes harder to manage. For gaming, that trade-off is acceptable. For large-scale AI workloads, which need high bandwidth continuously rather than in bursts, it starts to show limits.

What Is HBM Memory?

HBM (High Bandwidth Memory) is a stacked memory architecture built to deliver extremely high bandwidth by placing memory physically close to the GPU.

Instead of sitting on the circuit board like GDDR, HBM stacks multiple DRAM dies vertically and places each stack next to the processor inside the same package, typically on a silicon interposer. Each stack exposes a 1,024-bit data interface, so a package with several stacks has thousands of data connections between memory and compute units. The interface is much wider, even though per-pin data rates are lower.
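The same width-times-rate arithmetic shows why the wide interface matters. This sketch assumes a 1,024-bit interface per stack (the width used from the first HBM generation through HBM3E) and a hypothetical five-stack package running at 6.4 Gbps per pin; actual stack counts and data rates vary by product.

```python
HBM_BITS_PER_STACK = 1024  # data width per stack (HBM through HBM3E)


def hbm_package(stacks: int, gbps_per_pin: float) -> tuple[int, float]:
    """Return (total data pins, peak bandwidth in GB/s) for a multi-stack package."""
    pins = stacks * HBM_BITS_PER_STACK
    return pins, pins * gbps_per_pin / 8


# Hypothetical package: 5 stacks at 6.4 Gbps per pin.
pins, bandwidth = hbm_package(stacks=5, gbps_per_pin=6.4)
print(f"{pins} data pins, about {bandwidth:.0f} GB/s")
```

The per-pin rate here is modest; the bandwidth comes almost entirely from the number of pins.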

The result is very high sustained throughput. HBM's design suits workloads in which thousands of parallel operations need data at the same time, which makes it particularly well suited to modern AI systems.

Bandwidth vs Clock Speed: The Core Difference

The real difference between HBM and GDDR lies in how they deliver bandwidth, not just how fast they run.

GDDR increases bandwidth mainly by increasing clock frequency. HBM increases bandwidth by widening the data interface. One approach pushes signals faster through narrow lanes. The other moves more data at once through a much wider pathway.
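Turning the bandwidth relationship around makes the trade-off concrete: for a fixed bandwidth target, the required per-pin data rate is the target in bits per second divided by the interface width. The 1 TB/s target and the three widths below are illustrative assumptions.

```python
def required_gbps_per_pin(target_gb_per_s: float, bus_width_bits: int) -> float:
    """Per-pin data rate (Gbit/s) needed to reach a bandwidth target (GB/s)."""
    return target_gb_per_s * 8 / bus_width_bits


TARGET_GBS = 1000.0  # assumed target: 1 TB/s

# A narrow board-level bus versus one and several wide in-package stacks.
for label, width in (("384-bit bus", 384),
                     ("1 x 1024-bit stack", 1024),
                     ("4 x 1024-bit stacks", 4096)):
    rate = required_gbps_per_pin(TARGET_GBS, width)
    print(f"{label:<20} needs {rate:5.2f} Gbps per pin")
```

To hit the same target, the narrow bus needs more than ten times the per-pin speed of the four-stack configuration, which is where the power and signal-integrity costs come from.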

For AI training and large inference systems, sustained throughput matters more than peak burst speed. Thousands of compute cores request data simultaneously. A wide interface keeps them fed more reliably than extremely high clock speeds alone.
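One common way to reason about whether compute cores stay fed is the roofline model: attainable throughput is the smaller of peak compute and memory bandwidth multiplied by arithmetic intensity (FLOPs performed per byte moved). The numbers below (a 100 TFLOP/s accelerator and a kernel doing 20 FLOPs per byte) are hypothetical and only show how bandwidth alone changes utilization.

```python
def attainable_tflops(peak_tflops: float, bandwidth_tb_s: float,
                      flops_per_byte: float) -> float:
    """Roofline bound: min(peak compute, bandwidth x arithmetic intensity).

    TB/s multiplied by FLOP/byte gives TFLOP/s directly.
    """
    return min(peak_tflops, bandwidth_tb_s * flops_per_byte)


PEAK_TFLOPS = 100.0  # hypothetical accelerator peak
INTENSITY = 20.0     # hypothetical kernel: FLOPs per byte of memory traffic

for bandwidth in (1.0, 3.0, 6.0):  # TB/s, hypothetical memory systems
    achieved = attainable_tflops(PEAK_TFLOPS, bandwidth, INTENSITY)
    print(f"{bandwidth:.0f} TB/s -> {achieved:.0f} TFLOP/s "
          f"({achieved / PEAK_TFLOPS:.0%} of peak)")
```

Below the crossover point (5 TB/s for these numbers), every extra unit of bandwidth turns directly into delivered compute; above it, the accelerator is compute-bound.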

Memory Behavior in AI Workloads

AI workloads stress memory differently than gaming workloads.

Training a large model requires repeated access to billions of parameters. These parameters are read, updated, and reused continuously across many parallel cores. If memory cannot deliver enough data per second, compute units sit idle.
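A rough lower bound shows why parameter traffic matters: reading every parameter once takes at least the model's size in bytes divided by memory bandwidth. The 7-billion-parameter model, 2 bytes per parameter (FP16/BF16), and the two bandwidth figures are assumptions for illustration; training also moves gradients, optimizer state, and activations, so real traffic is higher.

```python
def full_pass_seconds(params: float, bytes_per_param: float,
                      bandwidth_tb_s: float) -> float:
    """Minimum time to read all parameters once at the given bandwidth.

    Ignores caching and overlap with compute, so it is a lower bound,
    not a performance prediction.
    """
    total_bytes = params * bytes_per_param
    return total_bytes / (bandwidth_tb_s * 1e12)


PARAMS = 7e9         # hypothetical model size
BYTES_PER_PARAM = 2  # FP16/BF16 weights

for bandwidth in (1.0, 3.0):  # TB/s, hypothetical memory systems
    t = full_pass_seconds(PARAMS, BYTES_PER_PARAM, bandwidth)
    print(f"{bandwidth:.0f} TB/s: at least {t * 1000:.1f} ms per full pass")
```

When a workload rereads the weights on every step, as token-by-token inference typically does, this floor applies to each step, and no amount of extra compute can push below it.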

GDDR can handle moderate AI workloads, especially smaller models or edge systems. But at scale, it becomes a limiting factor. HBM, by contrast, is built to maintain high utilization under heavy parallel demand.

Power Consumption and Efficiency

HBM generally delivers more bandwidth per watt than GDDR at large AI scale.

Because HBM sits closer to the GPU and uses lower clock speeds, it reduces signal travel distance and energy per bit transferred. GDDR must drive signals across longer board traces at higher frequencies, which increases power draw as bandwidth rises.
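Interface power scales with how many bits move per second and how much energy each bit costs. The energy-per-bit values below (4 pJ/bit for an in-package link, 8 pJ/bit for a board-level link) are illustrative assumptions chosen only to show the scaling; real figures differ across memory generations and vendors.

```python
def interface_watts(bandwidth_tb_s: float, picojoules_per_bit: float) -> float:
    """Power (W) = bits per second x joules per bit."""
    bits_per_second = bandwidth_tb_s * 1e12 * 8
    return bits_per_second * picojoules_per_bit * 1e-12


# Illustrative (assumed) energy costs per transferred bit.
ENERGY_PJ_PER_BIT = {"in-package (HBM-style)": 4.0, "board-level (GDDR-style)": 8.0}

for bandwidth in (1.0, 3.0):  # TB/s
    for link, pj in ENERGY_PJ_PER_BIT.items():
        print(f"{bandwidth:.0f} TB/s over {link}: "
              f"{interface_watts(bandwidth, pj):.0f} W")
```

At a fixed energy per bit, power grows linearly with bandwidth, so a per-bit saving is multiplied across every accelerator in a data center.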

In small systems, the difference may not be dramatic. But in large data centers running thousands of accelerators, efficiency per watt becomes a major economic factor. This is one reason why AI-focused GPUs rely heavily on HBM.

Cost and Manufacturing Complexity

HBM is significantly more expensive and complex to manufacture than GDDR.

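Stacking DRAM dies vertically with through-silicon vias (TSVs), then mounting the stacks beside the processor on a silicon interposer, requires advanced packaging that is harder to manufacture. Yields are lower, equipment requirements are higher, and a defect in any one die or connection can scrap an entire stack. All of this raises the cost per unit.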
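A simplified model shows why stacking hurts yield: if each die in a stack is good independently with probability p, an n-high stack is good only with probability p^n. The 95% per-die yield and the stack heights are hypothetical, and the model ignores bonding defects as well as the pre-stack die testing manufacturers use to screen out bad dies.

```python
def stack_yield(per_die_yield: float, dies: int) -> float:
    """Probability that all dies in a stack are good, assuming independent defects."""
    return per_die_yield ** dies


PER_DIE_YIELD = 0.95  # hypothetical

for height in (1, 4, 8, 12):
    print(f"{height:>2}-high stack: {stack_yield(PER_DIE_YIELD, height):.1%} good")
```

Even a high per-die yield compounds quickly as stacks get taller, which is one reason HBM costs more per bit than GDDR.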

GDDR is simpler to produce and easier to scale in consumer markets. That is why gaming GPUs typically use GDDR, while AI accelerators justify the higher cost of HBM due to performance demands.

Which Memory Is Better for AI GPUs?

For large-scale AI training and data center workloads, HBM is generally the better choice.

Its wide interface, proximity to compute, and high sustained bandwidth allow GPUs to operate near full utilization. As models grow larger and parallelism increases, memory throughput becomes the dominant constraint, and HBM handles that constraint more effectively.

That said, GDDR is not obsolete. It remains suitable for smaller AI deployments, consumer GPUs, and workloads where extreme parallel bandwidth is not required. The decision depends on scale and workload type.

Future Outlook

The future of AI GPUs is increasingly tied to memory architecture decisions.

As models grow and compute throughput continues to outpace gains in memory bandwidth, memory bandwidth density will play an even larger role in system design. Manufacturers are investing heavily in advanced memory technologies because compute gains alone are no longer sufficient.

Whether HBM continues to dominate or evolves into new forms, one thing is clear: memory is no longer secondary in AI hardware. It is central to performance and scalability.

Conclusion

HBM and GDDR represent two different philosophies of memory design.

GDDR prioritizes high clock speeds and flexibility for graphics workloads. HBM prioritizes wide interfaces and sustained bandwidth for parallel compute. In AI systems where memory bottlenecks can limit performance, architecture matters more than raw frequency.

For serious AI workloads, especially at data center scale, HBM provides the structural advantages needed to keep GPUs fully utilized. As AI continues to grow, memory design will remain one of the most important factors shaping hardware performance.

FAQs

Can GDDR be used for AI workloads?

GDDR can handle small to moderate AI workloads, especially in consumer GPUs. However, for large-scale training or high-parallel inference systems, it may become a limiting factor due to bandwidth constraints.

Why do AI GPUs use HBM instead of GDDR?

AI GPUs use HBM because it delivers significantly higher sustained bandwidth and better efficiency per watt, allowing thousands of compute cores to operate simultaneously without waiting for data.

Is HBM replacing GDDR?

HBM is not replacing GDDR entirely. GDDR remains common in gaming and mid-range GPUs. HBM is primarily used in high-end AI accelerators where bandwidth density and efficiency are critical.