Introduction

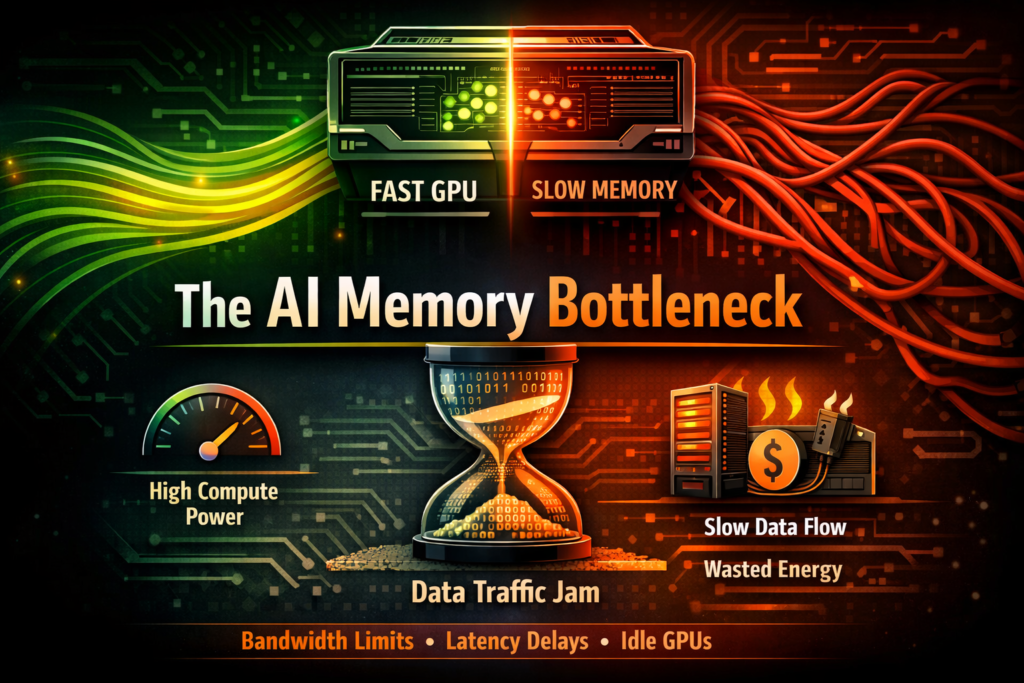

The AI memory bottleneck occurs when processors can perform more computation than the memory system can supply data for.

For years, AI progress was measured by how many FLOPS a GPU could deliver. More cores meant more throughput. Bigger clusters meant faster training. But something quietly changed. GPUs kept getting faster, yet real-world performance stopped scaling in proportion.

The reason is simple. Compute is no longer the only constraint. Memory bandwidth has become the new ceiling. If data cannot move fast enough, even the most powerful GPU ends up waiting.

What Is a Memory Bottleneck?

A memory bottleneck occurs when memory cannot deliver data quickly enough to keep compute units fully active.

In traditional computing, this was manageable because workloads were largely sequential and CPU caches hid much of the memory delay. AI workloads are different. Thousands of cores request data at the same time. When memory cannot serve those requests in parallel, queues form and performance drops.

The processor is ready to work. It just does not have the data.
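A common way to reason about this is the roofline model: a kernel's attainable throughput is the lower of two ceilings, peak compute and memory bandwidth multiplied by the kernel's arithmetic intensity (FLOPs performed per byte moved). The sketch below is a minimal illustration; the 100 TFLOP/s and 2 TB/s figures are hypothetical, not the specifications of any particular GPU.

```python
def attainable_flops(peak_flops: float, bandwidth_bytes_per_s: float,
                     arithmetic_intensity: float) -> float:
    """Roofline bound: the lower of the compute ceiling and the memory ceiling.

    arithmetic_intensity is FLOPs performed per byte read from or written to memory.
    """
    memory_ceiling = bandwidth_bytes_per_s * arithmetic_intensity
    return min(peak_flops, memory_ceiling)


def is_memory_bound(peak_flops: float, bandwidth_bytes_per_s: float,
                    arithmetic_intensity: float) -> bool:
    # The "ridge point" is the intensity at which the two ceilings meet.
    ridge_point = peak_flops / bandwidth_bytes_per_s
    return arithmetic_intensity < ridge_point


# Hypothetical accelerator: 100 TFLOP/s peak compute, 2 TB/s memory bandwidth.
PEAK = 100e12
BW = 2e12

for ai in (1.0, 10.0, 50.0, 200.0):
    achieved = attainable_flops(PEAK, BW, ai)
    print(f"AI={ai:>6.1f} FLOP/B -> {achieved / 1e12:6.1f} TFLOP/s "
          f"({achieved / PEAK:5.1%} of peak), memory-bound={is_memory_bound(PEAK, BW, ai)}")
```

With these assumed numbers, a kernel that performs one FLOP per byte can use at most 2% of peak compute: the processor is ready, but the data is not.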

How GPUs Actually Process AI Workloads

Modern AI GPUs are built for massive parallel execution.

Where a CPU runs a relatively small number of threads as quickly as possible, a GPU performs thousands of operations simultaneously. Neural networks rely on matrix multiplications and tensor operations that depend on a continuous flow of data.

If memory stalls, the entire pipeline slows down. Compute units do not keep working at reduced speed; they sit idle until their data arrives.
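How much data flow a matrix operation needs depends heavily on its shape. The sketch below computes arithmetic intensity for a matrix multiplication under an idealized assumption (each operand read once and the result written once, with perfect on-chip reuse) and 16-bit elements; real kernels move more bytes and score lower.

```python
def matmul_arithmetic_intensity(m: int, n: int, k: int, bytes_per_element: int = 2) -> float:
    """FLOPs per byte for C[m x n] = A[m x k] @ B[k x n].

    Assumes an idealized kernel that reads A and B once and writes C once.
    """
    flops = 2 * m * n * k                      # one multiply and one add per term
    bytes_moved = (m * k + k * n + m * n) * bytes_per_element
    return flops / bytes_moved


# Square matrix-matrix multiply vs. matrix-vector multiply (a single input vector).
print(f"GEMM 4096^3 : {matmul_arithmetic_intensity(4096, 4096, 4096):8.1f} FLOP/byte")
print(f"GEMV 4096x1 : {matmul_arithmetic_intensity(4096, 1, 4096):8.3f} FLOP/byte")
```

Under these assumptions the square multiply performs about 1,365 FLOPs per byte, while the matrix-vector product performs about one, far below the point where typical accelerators stop being memory-bound.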

Latency vs Throughput: The Critical Difference

Latency measures how long it takes for data to arrive. Throughput measures how much data can be delivered per second.

Traditional memory systems were optimized for low latency. That worked for general-purpose software. AI systems care more about throughput because thousands of operations run in parallel.

In AI workloads, wide and sustained bandwidth matters more than shaving off small latency differences.
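Latency and throughput are linked by Little's law: to sustain a given bandwidth, the memory system must keep bandwidth × latency bytes in flight at once. This minimal sketch uses hypothetical figures (a 2,000 GB/s target, 500 ns latency, 64-byte requests) to show why high throughput requires massive request-level parallelism.

```python
import math


def bytes_in_flight(bandwidth_gb_per_s: float, latency_ns: float) -> float:
    """Little's law: data that must be outstanding to sustain a bandwidth.

    Units work out neatly: 1 GB/s sustained for 1 ns is exactly 1 byte.
    """
    return bandwidth_gb_per_s * latency_ns


def outstanding_requests(bandwidth_gb_per_s: float, latency_ns: float,
                         request_bytes: int) -> int:
    # Round up: a partially filled request still occupies a full slot.
    return math.ceil(bytes_in_flight(bandwidth_gb_per_s, latency_ns) / request_bytes)


# Hypothetical throughput-oriented system: 2,000 GB/s, 500 ns, 64-byte requests.
print(outstanding_requests(2000, 500, 64))   # 15625
# Hypothetical latency-oriented system: 50 GB/s, 100 ns, 64-byte requests.
print(outstanding_requests(50, 100, 64))     # 79
```

Shaving latency reduces the parallelism required, but at AI-scale bandwidth the memory system must still juggle thousands of concurrent requests, which is why width and sustained throughput dominate.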

Why Traditional DRAM Creates the Bottleneck

Traditional DRAM was not designed for thousands of simultaneous data requests.

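It sits off-chip and connects to the processor through comparatively narrow interfaces; a standard DDR memory module, for example, transfers data over a 64-bit bus. As workloads scale, memory requests queue up behind these limited channels and are effectively serialized. The resulting delays multiply across thousands of cores.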

The result is compute starvation. Expensive processors burn power while waiting for data.

Why Adding More GPUs Does Not Fix It

Adding more GPUs does not automatically solve a memory bottleneck.

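If each GPU is already memory-bound, scaling out simply replicates the bottleneck. Each added GPU brings its own compute and its own memory, so the ratio of compute to bandwidth on every device stays the same and every device remains starved. Splitting the work across GPUs also adds communication overhead.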

This is why system architecture matters more than raw hardware count.
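A back-of-the-envelope model makes the point concrete. The sketch below assumes hypothetical GPUs (100 TFLOP/s peak, 2 TB/s bandwidth), a workload at 10 FLOPs per byte, perfect work splitting, and free communication, an assumption that flatters scale-out.

```python
def cluster_throughput(num_gpus: int, peak_flops: float, bandwidth_bytes_per_s: float,
                       arithmetic_intensity: float) -> tuple[float, float]:
    """Return (total achieved FLOP/s, fraction of total peak) for a scaled-out cluster.

    Simplifying assumption: work splits perfectly and inter-GPU communication is free;
    real clusters do worse.
    """
    per_gpu = min(peak_flops, bandwidth_bytes_per_s * arithmetic_intensity)
    total = num_gpus * per_gpu
    return total, total / (num_gpus * peak_flops)


# Hypothetical GPU: 100 TFLOP/s peak, 2 TB/s bandwidth; workload at 10 FLOP/byte.
for n in (1, 8, 64):
    total, utilization = cluster_throughput(n, 100e12, 2e12, 10.0)
    print(f"{n:>3} GPUs: {total / 1e12:7.1f} TFLOP/s, {utilization:.0%} of peak")
```

Total throughput grows with GPU count, but every configuration runs at 20% of its peak: the per-device bottleneck is copied, not removed.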

How High Bandwidth Memory Reduces the Bottleneck

High Bandwidth Memory (HBM) stacks DRAM dies vertically, which increases bandwidth and shortens the distance data has to travel. This design is central to how HBM powers modern AI infrastructure.

By placing stacked memory close to the processor and enabling thousands of parallel data connections, HBM delivers sustained throughput that keeps compute units active.

It does not eliminate all constraints, but it shifts the performance ceiling higher.
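Peak bandwidth is roughly interface width times transfer rate, which shows where HBM's advantage comes from. The sketch below compares nominal peak figures for one DDR5-4800 module (64 data bits) and one HBM3 stack (1024-bit interface at 6.4 Gb/s per pin); these are illustrative spec-sheet peaks, and sustained bandwidth is lower in practice.

```python
def peak_bandwidth_gb_per_s(bus_width_bits: int, transfer_rate_mt_per_s: float) -> float:
    """Peak bandwidth = bytes per transfer x transfers per second, in GB/s."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * transfer_rate_mt_per_s / 1000  # MB/s -> GB/s


# Nominal peak figures for illustration only.
ddr5_dimm = peak_bandwidth_gb_per_s(64, 4800)     # one DDR5-4800 module, 64 data bits
hbm3_stack = peak_bandwidth_gb_per_s(1024, 6400)  # one HBM3 stack, 1024-bit interface

print(f"DDR5-4800 DIMM: {ddr5_dimm:7.1f} GB/s")
print(f"HBM3 stack:     {hbm3_stack:7.1f} GB/s ({hbm3_stack / ddr5_dimm:.0f}x)")
```

The per-pin rates differ only modestly; most of the roughly 21x gap comes from the 16x wider interface, which stacking and close placement make practical.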

The Real Impact: Performance, Cost, and Energy

When memory becomes the main limit, performance gains slow while energy consumption continues to rise.

Idle compute units still consume power. Data centers pay for hardware that is not fully utilized. This inefficiency has both economic and environmental consequences.
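The energy cost can be sketched with a deliberately simple, assumed power model: a fixed static draw plus a dynamic component that scales with utilization. All figures below (100 TFLOP/s peak, 200 W static, up to 500 W dynamic) are hypothetical and not measurements of any real device.

```python
def joules_per_useful_flop(utilization: float, peak_flops: float,
                           static_watts: float, dynamic_watts: float) -> float:
    """Energy cost of each FLOP that does real work.

    Simplified linear power model (an assumption, not measured behavior):
    the device always draws static_watts, plus dynamic_watts scaled by utilization.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    power = static_watts + dynamic_watts * utilization
    useful_flops_per_s = utilization * peak_flops
    return power / useful_flops_per_s


# Hypothetical device: 100 TFLOP/s peak, 200 W static floor, up to 500 W more when busy.
for u in (0.9, 0.5, 0.2):
    pj = joules_per_useful_flop(u, 100e12, 200, 500) * 1e12
    print(f"utilization {u:.0%}: {pj:5.1f} pJ per useful FLOP")
```

Under this model, dropping from 90% to 20% utilization roughly doubles the energy spent per unit of useful work, because the static floor is paid whether or not data arrives.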

Fixing the memory bottleneck is not just about speed. It is about system efficiency.

Future Outlook

AI models continue to grow in size and complexity.

As parameter counts increase, memory bandwidth requirements grow with them. Without architectural changes, the memory bottleneck will define how far AI can scale.
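Text generation offers a simple illustration. If, as a simplifying assumption, every generated token must read all model weights from memory once (batch size 1, ignoring KV-cache traffic, batching, and on-chip caching), bandwidth alone caps the token rate. The 3,000 GB/s accelerator and the model sizes below are hypothetical.

```python
def max_decode_tokens_per_s(num_params: float, bytes_per_param: float,
                            bandwidth_gb_per_s: float) -> float:
    """Upper bound on single-stream generation speed when weights dominate traffic.

    Assumes batch size 1 and that every generated token reads all weights once;
    KV-cache traffic, batching, and on-chip caching are ignored.
    """
    weight_bytes = num_params * bytes_per_param
    return bandwidth_gb_per_s * 1e9 / weight_bytes


# Hypothetical accelerator with 3,000 GB/s; 16-bit (2-byte) weights.
for params in (7e9, 70e9, 700e9):
    rate = max_decode_tokens_per_s(params, 2, 3000)
    print(f"{params / 1e9:>5.0f}B params: <= {rate:6.1f} tokens/s")
```

Each tenfold increase in parameters cuts this ceiling tenfold unless bandwidth grows with it or the weights are stored in fewer bytes.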

The future of AI hardware will depend as much on memory innovation as on compute improvements.

Conclusion

The AI memory bottleneck is now one of the most important constraints in modern artificial intelligence systems.

Compute power is no longer the only limiting factor. Memory throughput, architecture, and data movement efficiency determine whether hardware reaches its potential.

Understanding this shift is essential for anyone working with AI infrastructure.

FAQs

What is the AI memory bottleneck?

The AI memory bottleneck occurs when memory systems cannot deliver data fast enough to keep GPU compute units fully utilized.

Why does memory bandwidth matter so much for AI?

AI workloads require massive parallel data access. If memory bandwidth is insufficient, processors wait for data instead of computing.

Do faster GPUs solve the memory bottleneck?

No. Faster GPUs can worsen the problem if memory bandwidth does not scale proportionally.